The Sora AI release date was September 30, 2025, when it arrived as OpenAI’s main text-to-video model. It first reached the U.S. and Canada through the Sora app and website. In just five days, Sora hit a million downloads and took the number one spot on the U.S. App Store, surpassing ChatGPT’s early mobile adoption.

Android support followed in November 2025 for the U.S., Canada, Japan, Korea, Taiwan, and Thailand, confirmed through coverage on sites such as TechCrunch and Android‑focused outlets. OpenAI’s own pages, including the Sora 2 launch post and the system card, frame this as the second major generation of the Sora model, tuned for more realistic physics, audio, and video control.

Analysts who track OpenAI release patterns (GPT‑4, DALL·E 3, and earlier Sora previews) point to a broader public access window between late November 2025 and early January 2026, based on reports from Skywork AI and Visla. Enterprise use through Microsoft’s Azure AI Foundry catalog and other partners adds a parallel “release track” for teams that already lean on managed cloud AI.

What changed with Sora 2

Sora 2 upgrades the original Sora research model into a production‑ready tool that simulates gravity, collisions, and fluid behavior at a level that now scores around 8.5 out of 10 in independent physics tests, ahead of many rivals such as Runway Gen‑3 and Pika Labs. The model creates video and audio in sync, meaning the dialogue, background noise, and sound effects match the action in each scene, so no separate system is needed for sound.

Video length and quality depend on the plan: Plus‑level access reaches about 5 seconds at 720p, and Pro access reaches roughly 20 seconds at 1080p, with frame rates between 24 and 60 fps and multiple aspect ratios. These ranges are documented in the Sora 2 feature guide from Sorato AI and in explainer posts from Comet and DataCamp, which highlight steerable camera moves, art styles, and more stable objects across frames.

Social and creative tools landed through October and November 2025 updates, including “character cameos” that let people turn themselves, their pets, or favorite items into reusable AI characters using reference video. News from TechCrunch, plus breakdowns on GLB GPT and Higgsfield, show how these cameos sit alongside basic editing, storyboards, channels, and faster feed performance.

Sora AI pricing, access, and Sora AI release date context

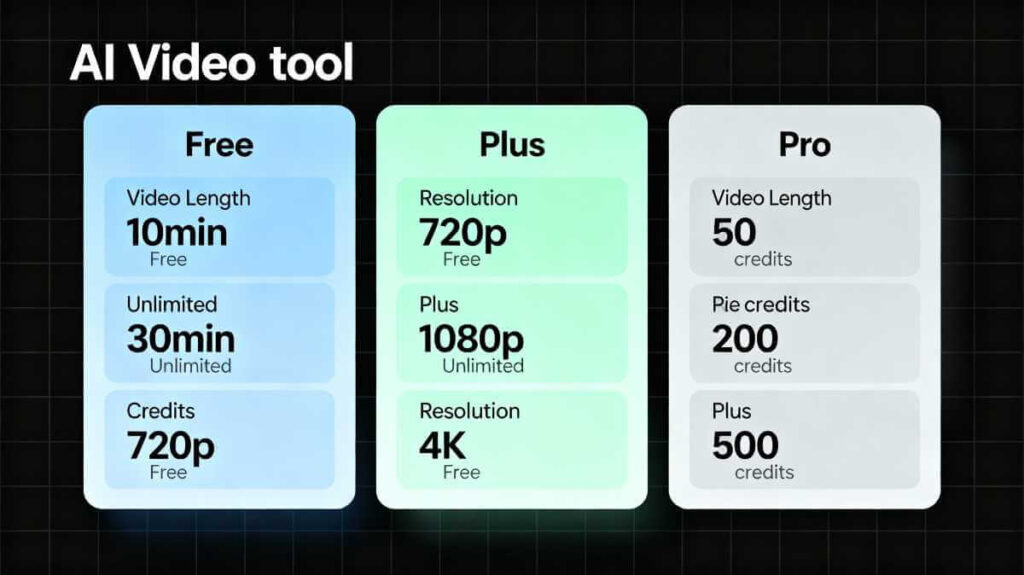

Pricing ties directly into how people experience the Sora AI release date, because access steps up through tiers rather than flicking on for everyone at once. Research from Eesel, Skywork AI, and GLB GPT lays out the current structure.

Sora 2 plans and limits

| Plan / Tier | Key access notes | Video limits (approx.) | Sources |
|---|---|---|---|
| Free (invite‑only) | Requires invite code, watermark on downloads, limited credits, no priority slot | Around 5 seconds at 720p, small monthly quota | Eesel, Skywork |
| ChatGPT Plus | Bundled access at about $20/month, lower quota, watermark on Sora output | Around 5 seconds at 720p, 1,000 credits/month | GLB GPT, Skywork |
| ChatGPT Pro | Around $200/month for creators and teams, watermark‑free option, priority | Up to about 20 seconds at 1080p, 10,000 credits/month | Eesel, Skywork |

OpenAI positions Sora 2 Pro inside the higher‑priced ChatGPT subscription tier, which combines Sora with GPT‑4‑level text models and advanced voice features. Guides from Eesel and Skywork AI outline credit counts, watermark behavior, and how Pro access opens the longer clips that many creative projects need.
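The tier limits in the table above can be treated as a small lookup when planning a clip. The sketch below is only an illustration: the `PlanLimits` class, the `PLANS` dictionary, and the `plans_supporting` helper are hypothetical names, and the numbers are the approximate figures from the table rather than official limits.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class PlanLimits:
    max_seconds: int                 # approximate maximum clip length
    max_height: int                  # vertical resolution, e.g. 720 for 720p
    monthly_credits: Optional[int]   # None where the table gives no figure


# Approximate values copied from the plan table; assumptions, not official limits.
PLANS = {
    "free": PlanLimits(max_seconds=5, max_height=720, monthly_credits=None),
    "plus": PlanLimits(max_seconds=5, max_height=720, monthly_credits=1_000),
    "pro": PlanLimits(max_seconds=20, max_height=1080, monthly_credits=10_000),
}


def plans_supporting(seconds: int, height: int) -> List[str]:
    """Return the plan names whose limits cover the requested clip."""
    return [
        name
        for name, limits in PLANS.items()
        if seconds <= limits.max_seconds and height <= limits.max_height
    ]
```

Under these assumed figures, a 15‑second 1080p clip would only fit the Pro tier, while a 5‑second 720p test clip fits all three.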

Azure customers can tap into Sora 2 through asynchronous jobs rather than a live prompt box, as described in Microsoft’s Azure model catalog announcement and OpenAI‑focused API explainers from Toolsmart and Scalevise. That track gives teams a different Sora AI release date experience, since they access the model through infrastructure they already use.

Sora AI vs Runway, Pika, and Veo

Comparison guides such as Skywork’s multi‑tool breakdown, JuheAPI’s review, and Cursor’s Veo vs Sora overview give a structured look at Sora’s position in the current field. These sources, along with AI Competence and Lovart, tend to agree that Sora 2 leads on physics, audio sync, and cinematic feel, with trade‑offs around clip length, price, and region locks.

Sora 2 vs other text‑to‑video tools

| Feature | Sora 2 | Runway Gen‑3 | Pika Labs | Google Veo 3 |
|---|---|---|---|---|
| Quality focus | Cinematic, surreal‑real hybrid | Realistic, narrative storytelling | Stylized social clips | Strong motion for longer scenes |
| Physics accuracy | About 8.5/10 | Around 7/10 | Around 6/10 | Around 8/10 |
| Typical duration | 5–20 seconds | Around 10 seconds | 3–10 seconds | Up to several minutes (reported) |
| Max resolution | Around 1080p for Pro | Up to 4K | Up to 1080p | Around 1080p–4K range, depending on tier |
| Speed | Roughly 3–8 minutes per clip | Faster, especially with Turbo modes | About 30–90 seconds | Varies |
| Starting price | Around $200/month for Pro | Around $15/month basic | Around $10/month | Limited or tied to Google cloud stack |
| Audio handling | Native, synchronized audio‑video | Audio usually added afterwards | Basic sound | Strong sync across longer segments |

Data points for this table come from comparison articles on Comet, Toolsmart, JuheAPI, and Skywork AI. Sora AI release date discussions in those pieces tend to highlight how late‑2025 access lines up with a crowded video‑generation market rather than arriving in isolation.

Everyday workflow: Sora AI release date from a user’s seat

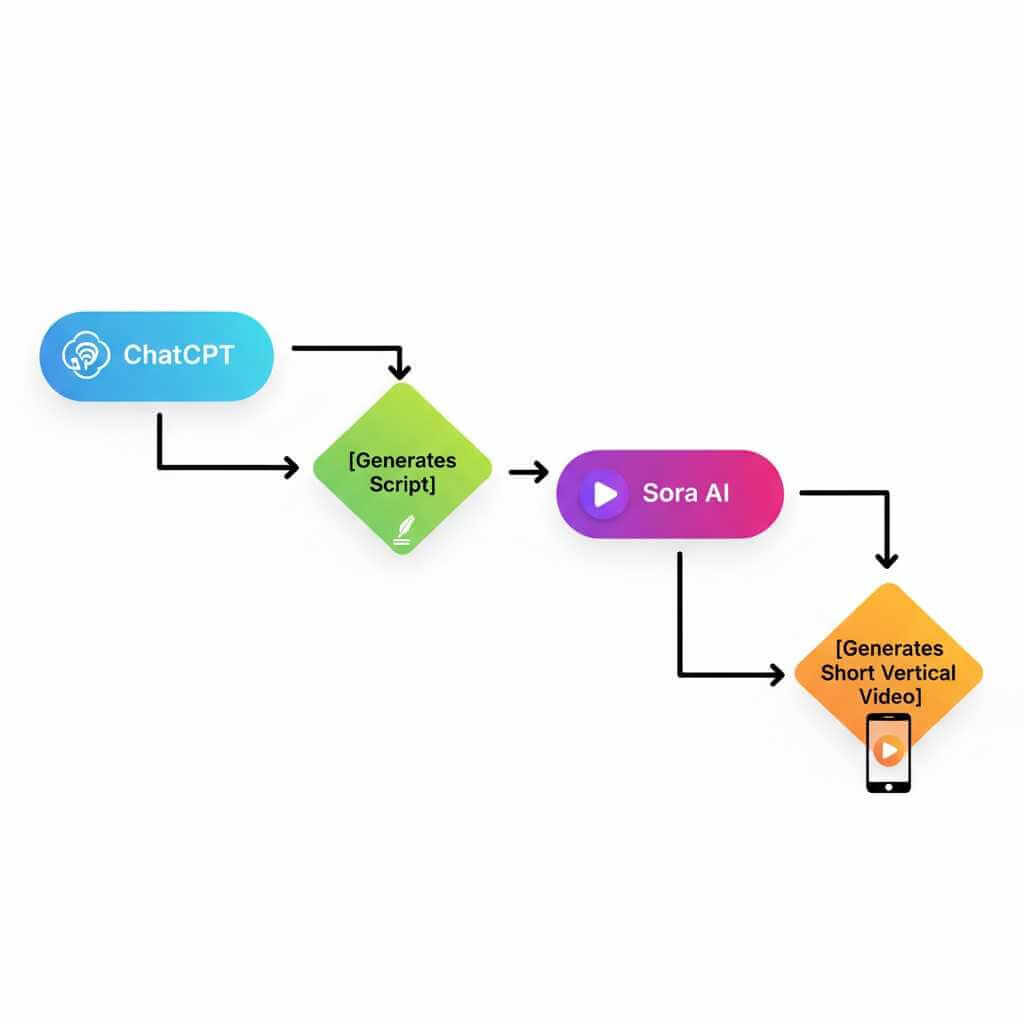

From the perspective of someone who leans on AI tools every day, Sora 2 sits next to ChatGPT rather than replacing it. A typical content session starts with ChatGPT for outlines, scripts, and shot lists, then moves into Sora for the parts that need moving pictures, especially short clips for social media posts, product explainers, or B‑roll.

For quick tech breakdowns, ChatGPT crafts the story and dialogue; Sora then turns that script into a 15- to 20-second video that fits right into a vertical video feed. This combo feels more streamlined than the old way, where you’d jump between different script tools, stock footage sites, and editing apps before anything even got to the audience.

Sora really shines in clips that play with physics or camera tricks: drones floating around, city views from above, slow-motion water scenes, or those intricate tracking shots that would normally need a ton of prep on a real set. ChatGPT is still the go-to for research, planning, and writing, but once the scene’s clear enough, Sora steps in to handle prompts with setting, action, and camera directions.

How to start using Sora AI after the Sora AI release date

The quickest path for individuals runs through the Sora app and soraapp.com, documented in guides from Skywork AI and OpenAI’s own help pages. New users sign in with an OpenAI account, pass age checks, and either redeem an invite code or join a waitlist while OpenAI expands capacity.

Prompt structure has a strong effect on results; breakdowns from Skywork’s “how to master Sora 2” guide and Higgsfield’s analysis suggest separating setting, subject, identity anchors, camera moves, mood, timing, and audio cues into clear phrases. Sora then processes the prompt over several minutes, and users can trim, remix, and blend clips using tools described in help content from OpenAI and tutorials on channels such as DataCamp and YouTube creators.
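One way to keep those prompt elements separate is to hold each in its own field and join them only at the end. The sketch below is a minimal illustration, not a format Sora requires: the `ScenePrompt` class and its field names are hypothetical, chosen to mirror the elements listed above.

```python
from dataclasses import dataclass


@dataclass
class ScenePrompt:
    # Field names are illustrative; they mirror the prompt elements named in the guides.
    setting: str
    subject: str
    identity_anchors: str = ""
    camera: str = ""
    mood: str = ""
    timing: str = ""
    audio: str = ""

    def render(self) -> str:
        """Join the non-empty fields into one prompt, one short sentence per field."""
        parts = [
            self.setting,
            self.subject,
            self.identity_anchors,
            self.camera,
            self.mood,
            self.timing,
            self.audio,
        ]
        # Normalize each phrase so it ends with exactly one period.
        return " ".join(p.strip().rstrip(".") + "." for p in parts if p.strip())


prompt = ScenePrompt(
    setting="Rainy city rooftop at dusk",
    subject="A courier checks a paper map",
    camera="Slow drone orbit",
    audio="Distant traffic and light rain",
).render()
print(prompt)
```

Keeping the fields apart makes it easier to change one element, such as the camera move, without rewriting the rest of the prompt.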

Teams that already run projects inside Azure can tap into Sora programmatically through Azure OpenAI endpoints, which accept job submissions and return completed clips later. Integration guides from Skywork and Lao Zhang’s blog cover key steps for connecting these Sora access points to existing pipelines.
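The submit-then-collect pattern described above usually comes down to polling a job until it reaches a final state. The sketch below shows only that generic loop and does not call any real Azure or OpenAI API: `wait_for_job`, `FakeJob`, and the status strings `"succeeded"` and `"failed"` are hypothetical stand-ins, so check the provider's documentation for the actual endpoints and status values.

```python
import time
from typing import Callable, Iterable


def wait_for_job(
    get_status: Callable[[], str],
    poll_seconds: float = 5.0,
    timeout_seconds: float = 600.0,
) -> str:
    """Poll get_status() until it returns a terminal state or the timeout passes."""
    deadline = time.monotonic() + timeout_seconds
    while True:
        status = get_status()
        if status in ("succeeded", "failed"):  # assumed terminal states
            return status
        if time.monotonic() >= deadline:
            raise TimeoutError("job did not finish before the timeout")
        time.sleep(poll_seconds)


class FakeJob:
    """In-memory stand-in for a remote video job, used only for this demo."""

    def __init__(self, states: Iterable[str]):
        self._states = iter(states)
        self._last = "queued"

    def status(self) -> str:
        # Advance through the scripted states, then keep repeating the last one.
        self._last = next(self._states, self._last)
        return self._last


job = FakeJob(["queued", "running", "running", "succeeded"])
result = wait_for_job(job.status, poll_seconds=0.0, timeout_seconds=5.0)
print(result)
```

In a real pipeline, `get_status` would wrap whatever status call the service exposes, and the poll interval would be set to seconds or longer, since the article notes that clips take several minutes to generate.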

Limits, safety, and upcoming versions

Reports from reviewers and early users on Mashable, Parents.com, and OpenAI’s policy page highlight strict rules around realistic children, intimate content, and harmful scenarios. Outputs carry visible watermarks for free‑tier users, along with C2PA content‑credential metadata that some independent testers on LessWrong and Scalevise question for consistency.

Limits in the current release show up in clip length, resolution for free users, regional restrictions, and occasional flicker or blur, as described in pros‑and‑cons write‑ups from Skywork AI and Lao Zhang’s invite guide. Those sources tie Sora AI release date excitement to a need for media literacy and parental oversight, since realistic video synthesis raises cybersecurity and misinformation concerns at the same time as it speeds up creative work.

Roadmap coverage from YouTube analysts and DataCamp’s Sora blog points toward a likely Sora 3 release that stretches clip length toward 90 seconds or more, raises resolution to 4K, and strengthens character memory across shots. Those guesses draw on OpenAI’s earlier rollouts, along with the pace of competition from Google’s Veo line and Meta’s Vibes‑style video tools.

Sora AI FAQ

Q1. What is the Sora AI release date for the current version?

The current Sora model, often called Sora 2, launched on 30 September 2025 for users in the United States and Canada through the Sora app and web interface. Android access started rolling out later, first in North America and selected Asian markets during November 2025.

Q2. Is Sora AI available on Android and in my country yet?

Sora AI reached Android users in the U.S., Canada, Japan, Korea, Taiwan, and Thailand during its second rollout phase. Other regions still depend on a staged access plan, invite codes, or enterprise routes such as Azure OpenAI, so availability can differ by country.

Q3. Do I need ChatGPT Plus or Pro to use Sora AI?

Sora AI sits inside the broader OpenAI account system, and current plans link Sora access to ChatGPT subscriptions. Free and Plus users see shorter videos and watermarks, while Pro subscribers gain longer clips, higher resolution, more credits, and priority processing inside the same subscription.

Q4. How long can Sora AI videos be, and what quality can I expect?

Plus‑level access produces clips around 5 seconds at 720p, aimed at quick tests or simple social posts. Pro unlocks clips up to about 20 seconds at 1080p with higher frame rates and more flexible aspect ratios for short ads, explainers, or B‑roll.

Q5. How does Sora AI compare to tools like Runway, Pika, or Google Veo?

Independent comparison guides rate Sora 2 very strongly on physics, camera motion, and audio sync, which makes complex scenes feel more natural. Runway Gen‑3 offers longer creative control for production workflows, Pika Labs focuses on speed and price, and Veo pushes longer durations, so the right choice depends on budget, clip length, and style needs.

Q6. Can I use Sora AI every day for social media clips and client work?

Daily use works well when Sora AI pairs with ChatGPT: text models handle scripts and hooks, and Sora turns the best ideas into short videos. Pro plans give enough credits and clip length for regular content on platforms like Instagram Reels, TikTok, or YouTube Shorts, as long as projects fit within the 20‑second limit.

Q7. Is Sora AI safe, or should I worry about deepfakes and misuse?

Sora AI includes visible watermarks, content‑credential metadata, and strict rules against realistic child imagery, non‑consensual content, and certain violent scenes. Safety researchers still raise concerns around deepfakes and misinformation, so brands and creators need clear internal rules about topics, disclosure, and review before publishing Sora clips.

Q8. Will Sora AI get longer videos or a new Sora 3 release?

Analysts expect a future Sora 3 version that raises maximum clip length toward 90 seconds or more, improves 4K support, and strengthens character memory across shots. That forecast uses OpenAI’s past rollout timing for GPT‑4 and DALL·E 3 plus public hints from Sora research coverage, so exact dates for a new Sora AI release date window can still shift.

Q9. Can I use Sora AI for client projects and commercial work?

Many early adopters already use Sora AI for ads, product demos, training snippets, and travel or real‑estate mockups, especially under the Pro plan. Before paid work, creators still need to review OpenAI’s usage policies, watermark rules, and local regulations around AI‑generated media, then include that information in client contracts.

Q10. How does Sora AI fit into a normal AI workflow with ChatGPT?

A common pattern starts with ChatGPT for research, outlines, and voice direction, then moves into Sora AI for visual execution once the story feels clear. That split lets text models handle ideas and structure while Sora AI focuses on motion, lighting, and composition tied to a precise script.

Key takeaways on the Sora AI release date

The Sora AI story centers on Sora 2, a video-first tool with standout features that was released on 30 September 2025. In practice, however, the Sora AI release date is a phased rollout, starting with iOS and web, followed by Android, and then wider cloud access. Sora 2 leads the market on video control, audio synchronisation, and the ability to mimic real-world physics. Users adopting Sora AI can combine Sora 2 with other video tools to extend what those tools offer on their own.

For users who already have access to the tool, building these habits now will be more rewarding than waiting on new features. Users are encouraged to start from the prompt interface, whether spoken or written, and to work Sora into a regular loop alongside ChatGPT. The more Sora AI access is used, the greater the chance of receiving an account upgrade.